Activation Functions in Deep Learning

In artificial neural networks (ANNs), the activation function determines the output of each neuron: it decides whether, and how strongly, the neuron is activated. The choice of activation function affects a model's output, its accuracy, and its computational efficiency.

Single neuron structure. Source wiki.tum.de

Inputs are fed into the neurons of the input layer. Each input (Xi) is multiplied by its weight (Wi), the products are summed, and the bias (b) is added to give the neuron's pre-activation output: y = Σ(Xi*Wi) + b. The activation function is then applied to y, and the result is passed on to the next layer.
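
The single-neuron computation described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names and the input, weight, and bias values are hypothetical, and sigmoid is used only as an example activation.

```python
import math

def sigmoid(y):
    return 1.0 / (1.0 + math.exp(-y))

def neuron_output(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus the bias, passed through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    y = sum(x * w for x, w in zip(inputs, weights)) + bias  # pre-activation
    return activation(y)

# Hypothetical values, chosen only for illustration.
out = neuron_output([1.0, 2.0], [0.5, -0.25], 0.0, sigmoid)
print(out)  # the pre-activation is 0.0, so sigmoid returns 0.5
```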

Properties an activation function should have

- Differentiable: the derivative is the change along the y-axis with respect to the change along the x-axis, also known as the slope. Backpropagation needs it to compute gradients.
- Monotonic: the function is either entirely non-increasing or entirely non-decreasing.
- The choice of activation function in deep neural networks has a significant impact on training dynamics and task performance.

Most popular activation functions

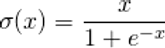

1. Sigmoid function(Logistic function)

It is one of the most commonly used activation functions. The sigmoid function was adopted in ANNs as a smooth, differentiable replacement for the step function.

Sigmoid activation function

The function's formula and its derivative:

Sigmoid function and its derivative

In the sigmoid function, the output lies in the open interval (0, 1):

- The output always lies between 0 and 1
- Sigmoid is an S-shaped, monotonic, and differentiable function
- The derivative of the sigmoid function, f’(x), lies between 0 and 0.25
- Because the function is differentiable, we can find the slope of the sigmoid curve at any point

- The function is monotonic, but its derivative is not

During backpropagation, the derivative of the sigmoid always falls between 0 and 0.25. Every activation function has its pros and cons, and sigmoid is no exception.
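
To make these properties concrete, here is a small sketch of the sigmoid, f(x) = 1/(1 + e^(-x)), and its derivative, f’(x) = f(x)(1 - f(x)). The function names are illustrative, and the two-branch form is just one common way to avoid overflow for large negative inputs.

```python
import math

def sigmoid(x):
    """Logistic function, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def sigmoid_derivative(x):
    """f'(x) = f(x) * (1 - f(x)); the largest value is 0.25, at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

for x in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(x, round(sigmoid(x), 5), round(sigmoid_derivative(x), 5))
```

Running it shows the derivative peaking at 0.25 for x = 0 and shrinking toward 0 as the input moves away from 0 in either direction.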

Pros:

- Output values are bounded between 0 and 1
- Smooth gradient, preventing “jumps” in output values

Cons:

- The derivative of the sigmoid function suffers from the “vanishing gradient” problem. Looking at the function plot, you can see that when inputs become very negative or very positive, the function saturates at 0 or 1, with a derivative extremely close to 0. Almost no gradient is propagated back through the network, so almost nothing is left for the lower layers, and training takes a long time to converge.
- Computationally expensive: the function performs an exponential operation, which takes more computation time.
- The sigmoid function is not zero-centered: since 0 < output < 1, the gradient updates can go too far in different directions, which makes optimization harder.

Vanishing Gradient. Source click

The vanishing gradient problem is encountered when training artificial neural networks with gradient-based learning methods and backpropagation. In such methods, each of the neural network’s weights receives an update proportional to the partial derivative of the error function with respect to the current weight in each iteration of training. The problem is that in some cases, the gradient will be vanishingly small, effectively preventing the weight from changing its value. In the worst case, this may completely stop the neural network from further training.
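
A rough way to see why this happens with sigmoid: backpropagation multiplies one activation derivative per layer, and each sigmoid derivative is at most 0.25. The sketch below is a deliberate simplification that assumes weights of magnitude 1, so only the activation derivatives shrink the gradient; the function name is hypothetical.

```python
def max_gradient_factor(num_layers, max_derivative=0.25):
    """Upper bound on the product of sigmoid derivatives across layers.

    Assumes weights of magnitude 1 (an assumption of this sketch), so the
    gradient can shrink only through the activation derivatives.
    """
    return max_derivative ** num_layers

for n in (1, 5, 10, 20):
    print(n, max_gradient_factor(n))
```

Even in this best case, the factor reaching the first layer of a 10-layer network is below one millionth.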

2. Tanh Activation Function:

The hyperbolic tangent (Tanh) is similar to sigmoid with a slight variation: its output is zero-centered, which overcomes one of the problems of sigmoid.

The plot of the function and its derivative:

Tanh activation function

The plot of tanh and its derivative

- The function is also a common S-shaped curve
- The difference is that the output of Tanh is zero-centered, with a range from -1 to 1 (instead of 0 to 1 in the case of the sigmoid function)
- The function is differentiable, the same as sigmoid
- Like sigmoid, the function is monotonic, but its derivative is not
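
The close relationship between the two functions can be checked numerically: tanh(x) equals 2*sigmoid(2x) - 1, and its derivative is 1 - tanh(x)^2. The sketch below uses Python's built-in `math.tanh`; the helper names and the test point are illustrative.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def tanh_derivative(x):
    """d/dx tanh(x) = 1 - tanh(x)^2."""
    t = math.tanh(x)
    return 1.0 - t * t

x = 0.7  # arbitrary test point
print(math.tanh(x), 2.0 * sigmoid(2.0 * x) - 1.0)  # the two values agree
print(tanh_derivative(0.0))  # 1.0, the maximum slope
```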

Pros:

- The whole function is zero-centered, which is better than sigmoid
- Optimization is easier
- The derivative of the tanh function lies between 0 and 1

Tanh tends to make each layer’s output more or less centered around 0, and this often helps speed up convergence.

Since sigmoid and tanh are so similar, they also face the same problems.

Cons:

- The derivative of the Tanh function suffers from the “vanishing gradient” problem
- Computationally expensive

In binary classification problems, Tanh is typically used in the hidden layers, while the sigmoid function is used in the output layer.

3. ReLU (Rectified Linear Unit) Activation Function:

This is the most popular activation function in deep learning (used in hidden layers).

f(x) = max(0,x)

ReLU function

This can be represented as: ReLU(x) = max(0, x)

If the input is negative the function returns 0, but for any positive input, it returns that value back.

The plot of the function and its derivative:

ReLU function and its derivative. Source here

- It does not activate all the neurons at the same time
- The ReLU function is non-linear around 0, but the slope is always either 0 (for negative inputs) or 1 (for positive inputs)
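
As a minimal sketch, ReLU and its slope can be written as follows. The function names are illustrative, and returning 0 for the derivative at exactly x = 0 (where it is undefined) is a common convention assumed here.

```python
def relu(x):
    """f(x) = max(0, x)."""
    return max(0.0, x)

def relu_derivative(x):
    # The derivative is undefined at x == 0; using 0 there is a common
    # convention and an assumption of this sketch.
    return 1.0 if x > 0 else 0.0

for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(x, relu(x), relu_derivative(x))
```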

Pros:

- Computationally efficient: the function is very fast to compute compared to sigmoid and Tanh, because it does not calculate an exponent
- Converges very fast
- Solves the gradient saturation problem when the input is positive

Cons:

- The ReLU function is not zero-centered
- The major problem is that it suffers from the dying ReLU problem

Dying ReLU

“Whenever we get the input as negative in ReLU the output will become 0. In back-propagation network doesn’t learn anything(because you can’t backpropagate into it) since it just keeps outputting 0s for the negative input, the gradient descent does not affect it anymore. In other word if the derivative is 0 the whole activation becomes zero hence no contribution of that neuron into the network.”

4. Leaky ReLU:

Leaky ReLU was introduced to overcome the problems of ReLU. It has all the properties and advantages of ReLU, and it avoids the dying ReLU problem.

It is an improved version of the ReLU function that introduces a small constant slope for negative inputs.

Leaky ReLU is defined as:

f(x) = max(αx, x), where α is a small value, usually 0.01

We give α a small positive value so that the activation does not become zero for all negative inputs. The hyperparameter α defines how much the function leaks: it is the slope of the function for x < 0 and is typically set to 0.01. The small slope ensures that Leaky ReLU never dies.
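
A minimal sketch of Leaky ReLU and its slope, using the typical α = 0.01 as the default. The function names are illustrative; for 0 < α < 1 the branch form below gives the same result as max(αx, x).

```python
def leaky_relu(x, alpha=0.01):
    """Returns x for positive inputs and alpha * x otherwise."""
    return x if x > 0 else alpha * x

def leaky_relu_derivative(x, alpha=0.01):
    """Slope is 1 for positive inputs and alpha otherwise (never exactly 0)."""
    return 1.0 if x > 0 else alpha

for x in (-5.0, -1.0, 1.0, 5.0):
    print(x, leaky_relu(x), leaky_relu_derivative(x))
```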

Leaky ReLU and its derivatives. Source click

Pros:

- It addresses the problem of dying (dead) neurons
- The small slope α ensures that neurons never die, i.e. it helps neurons “stay alive”
- It allows a non-zero gradient for negative inputs during backpropagation

Cons:

- Prone to vanishing gradients: for negative inputs the gradient is scaled by the small slope α
- Slightly more computation than ReLU

5. Exponential Linear Unit (ELU):

ELU was also proposed to solve the problems of ReLU. It is a variation of ReLU that, in its authors’ experiments, outperformed the other ReLU variants.

f(x) = x, if x > 0

f(x) = α(e^x - 1), if x <= 0

ELU Function. Source click

ELU tends to drive the cost toward zero faster and produce more accurate results. Unlike ReLU, ELU has an extra constant alpha (α), which should be a positive number.

ELU function and derivative. Source click

ELU modifies the slope of the negative part of the function. Instead of a straight line, ELU uses an exponential curve for negative values. Training converges faster, but each computation is slower because of the exponential function.
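
A minimal sketch of ELU and its derivative follows. The function names and the default α = 1.0 are assumptions made for illustration; `math.expm1(x)` computes e^x - 1 accurately for small x.

```python
import math

def elu(x, alpha=1.0):
    """x for positive inputs, alpha * (e^x - 1) otherwise."""
    return x if x > 0 else alpha * math.expm1(x)

def elu_derivative(x, alpha=1.0):
    """1 for positive inputs, alpha * e^x otherwise."""
    return 1.0 if x > 0 else alpha * math.exp(x)

for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(x, round(elu(x), 4), round(elu_derivative(x), 4))
```

For large negative inputs the output levels off near -α instead of growing linearly, which is the difference from Leaky ReLU.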

Pros:

- No dead ReLU issue: it solves the problem of dying neurons
- Mean output closer to zero

Cons:

- Computationally intensive: the exponential function makes it slower to compute than ReLU

6. Parametric ReLU(PReLU):

The idea of leaky ReLU can be extended even further. Instead of multiplying x by a constant, we can multiply it by a learnable parameter, which seems to work better than leaky ReLU. This extension of leaky ReLU is known as Parametric ReLU.

PReLU function

Here α is allowed to be learned during training (instead of being a hyperparameter, it becomes a parameter that can be modified by backpropagation like any other parameter). PReLU has been reported to outperform ReLU on large image datasets, but on smaller datasets it runs the risk of overfitting the training set.

ReLU vs. PReLU. Source click

In the negative region, PReLU has a small slope, which can also avoid the problem of dying ReLU. Although the slope is small, it does not tend to 0, which is an advantage.

Consider the formula of PReLU: f(yᵢ) = yᵢ if yᵢ > 0, and f(yᵢ) = αᵢyᵢ if yᵢ <= 0. Here yᵢ is the input on the ith channel and αᵢ is the negative slope, a learnable parameter that is generally a relatively small number between 0 and 1. While the positive part is linear, the slope of the negative part is learned adaptively during the training phase.

- If αᵢ = 0, f becomes ReLU
- If αᵢ is a small fixed positive value, f becomes leaky ReLU
- If αᵢ is a learnable parameter, f becomes PReLU
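
What "learnable" means here can be sketched with a single gradient-descent step on α. For a negative input y, the derivative of the output with respect to α is y itself, so backpropagation can adjust the slope. Everything in this sketch (function names, the starting α, the learning rate, the input, and the upstream gradient) is a hypothetical value chosen for illustration.

```python
def prelu(y, alpha):
    """y for positive inputs, alpha * y otherwise."""
    return y if y > 0 else alpha * y

def prelu_grad_alpha(y):
    """Partial derivative of prelu(y, alpha) with respect to alpha."""
    return 0.0 if y > 0 else y

# One hypothetical gradient-descent step on alpha for a single input.
alpha, learning_rate = 0.25, 0.1
y, upstream_grad = -2.0, 1.0  # upstream_grad stands in for dLoss/dOutput
alpha -= learning_rate * upstream_grad * prelu_grad_alpha(y)
print(alpha)  # alpha has moved from 0.25 to about 0.45
```

Positive inputs leave α untouched, because the gradient with respect to α is 0 there.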

Pros:

- Avoids the problem of dying neurons
- Performs better with large datasets
- Adds a parameter α (which controls the negative slope) that can be modified during backpropagation

Cons:

- Though the lower-bound parameter α induces variation, the one-sided saturation doesn’t lead to better saturation

7. Softmax:

The softmax function calculates a probability distribution over ‘n’ different events. In general terms, this function calculates the probability of each target class over all possible target classes, which helps determine the target class.

Softmax function. Source click

Softmax ensures that smaller values have a smaller probability and will not be discarded directly. It is a “max” that is “soft”.

It returns the probability of a data point belonging to each individual class. Note that the sum of all the values is 1.

Softmax can be described as a generalization of the sigmoid function to multiple classes.

Softmax normalizes an input vector into a vector of values that follows a probability distribution whose total sums to 1.

The output values lie in the range [0,1], which is useful because we are not limited to binary classification and can accommodate as many classes or dimensions as needed in our neural network model.

For a multiclass problem, the output layer has as many neurons as there are target classes. Softmax is typically used in the output layer of a neural network to classify inputs into multiple categories.

For example, suppose you have 4 classes [A, B, C, D]. There would be 4 neurons in the output layer. Assume the neurons output [2.5, 5.7, 1.6, 4.3]. After applying the softmax function you get approximately [0.03, 0.77, 0.01, 0.19]. These represent the probability of the data point belonging to each class. From the probability values we can say that the input belongs to class B.
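
The softmax of the four scores in this example can be computed with a short sketch: each score is exponentiated and divided by the sum of all the exponentials. Subtracting the maximum score first is a standard trick for numerical stability and does not change the result; the function name is illustrative.

```python
import math

def softmax(scores):
    """Softmax with the maximum subtracted first for numerical stability."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.5, 5.7, 1.6, 4.3])
print([round(p, 2) for p in probs])  # [0.03, 0.77, 0.01, 0.19]
print("ABCD"[probs.index(max(probs))])  # B, the class with the largest score
```

The largest score (5.7, class B) receives the largest probability, and the smallest score (1.6, class C) the smallest.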

8. Swish Function:

The Swish function, known as a self-gated activation function, was introduced by researchers at Google. Mathematically it is represented as

Swish function

According to the paper, the Swish activation function performs better than ReLU.

Swish activation function. source click

From the figure, we can observe that in the negative region of the x-axis the shape of the tail differs from that of the ReLU activation function. Because of this, the output of the Swish activation function may decrease even when the input value increases. Most activation functions are monotonic, i.e., their value never decreases as the input increases. Swish is bounded on one side (below), smooth, and non-monotonic.

The formula is :

f(x) = x*sigmoid(x)

According to the research paper, experiments show that Swish tends to work better than ReLU on deeper models across a number of challenging datasets. For example, simply replacing ReLUs with Swish units improves top-1 classification accuracy on ImageNet by 0.9% for Mobile NASNet-A and 0.6% for Inception-ResNet-v2.

The simplicity of Swish and its similarity to ReLU make it easy for practitioners to replace ReLUs with Swish units in any neural network.

- Swish activation function: f(x) = x*sigmoid(x)
- The Swish curve is smooth, which makes it less sensitive to weight initialization and learning rate. Smoothness plays an important role in generalization and optimization
- It is a non-monotonic function that consistently matches or outperforms ReLU
- It is unbounded above, which avoids saturation (training becomes slow where gradients are near 0), and bounded below, which helps with strong regularization because large negative inputs are mapped to values near zero
- The non-monotonic attribute is what actually makes the difference
- With self-gating, it requires just a single scalar input, whereas multi-gating would require multiple scalar inputs. It was inspired by the use of the sigmoid function in LSTMs (Hochreiter & Schmidhuber, 1997) and Highway networks (Srivastava et al., 2015); “self-gated” means that the gate is the sigmoid of the activation itself
- Unboundedness helps prevent the gradient from gradually approaching 0 during slow training
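
The non-monotonic behaviour is easy to see numerically. The sketch below implements f(x) = x*sigmoid(x) in the equivalent form x / (1 + e^(-x)). The function name is illustrative, and this simple form is only meant for moderate inputs, since `math.exp` overflows for very large negative x.

```python
import math

def swish(x):
    """Swish: x * sigmoid(x), which simplifies to x / (1 + e^-x)."""
    return x / (1.0 + math.exp(-x))

for x in (-5.0, -2.0, -1.0, 0.0, 1.0):
    print(x, round(swish(x), 4))
```

Between x = -5 and x = -1 the output falls as the input rises, and only then does it start increasing, which is the dip in the negative tail that ReLU does not have.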

9. Softplus:

It is similar to the ReLU function, but it is smoother. The Softplus function is:

f(x) = ln(1 + e^x)

Softplus activation function, Source click

The derivative of the Softplus function is the logistic function:

f’(x) = 1/(1 + e^(-x))

The output range of the function is (0, +inf).

ReLU and Softplus are largely similar, except near 0, where Softplus is smooth and differentiable.

It is much easier and more efficient to compute ReLU and its derivative than the Softplus function, which involves log and exp.
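
A minimal sketch of Softplus and its derivative is shown below. The function names are illustrative. The function is rearranged as max(x, 0) + ln(1 + e^(-|x|)), which equals ln(1 + e^x) but does not overflow for large inputs; the derivative is the logistic sigmoid.

```python
import math

def softplus(x):
    """ln(1 + e^x), rearranged so that large |x| does not overflow."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def softplus_derivative(x):
    """The derivative of softplus is the logistic sigmoid 1 / (1 + e^-x)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

for x in (-5.0, 0.0, 5.0):
    print(x, round(softplus(x), 4), max(0.0, x))  # softplus next to ReLU
```

Away from 0 the two columns are nearly identical, while at x = 0 Softplus gives ln(2) instead of ReLU's sharp corner at 0.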

The images below summarize all the activation functions. Have a look and recall everything we have learned so far.

Activation functions. Source click

How to decide which one to choose or which is the right one?

Selecting an activation function is critical. So which one should you select? There is no rule that says which activation function to use. Each activation function has its own pros and cons, and what works well or badly is ultimately decided by trial and error.

But based on the properties of the problem, we may be able to make a better choice for easier and quicker convergence of the network.

- Sigmoid functions and their combinations generally work better for classification problems, but they suffer from vanishing gradients
- We generally use ReLU in hidden layers
- In case of dead neurons, the leaky ReLU function can be used
- Use PReLU if you have a huge training set
- Softmax is used in the output layer for classification problems (mostly multiclass)
- There is a tradeoff between convergence and computation

Vivek patel

Data Scientist

at Kushagramati